PiVR

World Wide Series Seminar

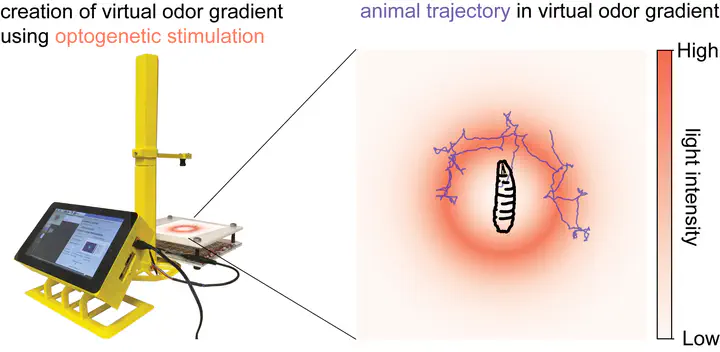

PiVR is a system that allows experimenters to immerse small animals into virtual realities. The system tracks the position of the animal and presents light stimulation according to predefined rules, thus creating a virtual landscape in which the animal can behave. By using optogenetics, we have used PiVR to present fruit fly larvae with virtual olfactory realities, adult fruit flies with a virtual gustatory reality and zebrafish larvae with a virtual light gradient.
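The idea of a virtual landscape can be illustrated with a minimal sketch: a 2D map of stimulus intensities is looked up at the animal's tracked position on every frame. The code below is not PiVR's actual implementation or API. The function names, the Gaussian shape of the landscape, and the arena size are all assumptions chosen for illustration.

```python
import math


def gaussian_landscape(width, height, peak_x, peak_y, sigma):
    """Build a hypothetical virtual landscape.

    Returns a list of rows; each value is a stimulus intensity in percent
    (0 to 100) that peaks at (peak_x, peak_y) and decays as a Gaussian.
    """
    return [
        [
            100.0 * math.exp(
                -((x - peak_x) ** 2 + (y - peak_y) ** 2) / (2 * sigma ** 2)
            )
            for x in range(width)
        ]
        for y in range(height)
    ]


def stimulus_for_position(landscape, x, y):
    """Look up the stimulus intensity at the animal's pixel position."""
    height = len(landscape)
    width = len(landscape[0])
    # Clamp so that a detection just outside the frame still maps to a pixel.
    col = min(max(int(round(x)), 0), width - 1)
    row = min(max(int(round(y)), 0), height - 1)
    return landscape[row][col]


# Assumed arena of 64 x 48 pixels with the peak in the center.
landscape = gaussian_landscape(width=64, height=48, peak_x=32, peak_y=24, sigma=10.0)

# A made-up trajectory that moves from a corner toward the peak.
trajectory = [(2, 2), (16, 12), (32, 24)]
intensities = [stimulus_for_position(landscape, x, y) for x, y in trajectory]
```

In this sketch the intensity rises as the simulated animal approaches the peak, which is the behavior one would expect from a virtual gradient. In a real setup the looked-up value would set the strength of the light stimulus.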

PiVR operates at high temporal resolution (70 Hz) with low latencies (<30 milliseconds) while being affordable (<US$500) and easy to build (<6 hours). Through extensive documentation (www.PiVR.org), this tool was designed to be accessible to a wide audience, from high school students to professional researchers studying systems neuroscience in academia.

The project is open source (BSD-3) and the documented code is written in the freely available programming language Python. We hope that PiVR will be adapted by advanced users for their particular needs, for example to create closed-loop experiments involving other sensory modalities (e.g., sound/vibration) through the use of PWM-controllable devices. We envision PiVR being used as the central module when creating virtual realities for a variety of sensory modalities. This ‘PiVR module’ takes care of detecting the animal and presenting the appropriate PWM signal, which is then picked up by the PWM-controllable device installed by the user, for example to produce a sound whenever an animal enters a pre-defined region.
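The region-triggered example can be sketched as a small closed loop: for each tracked position, decide whether the animal is inside a pre-defined region and set a PWM duty cycle accordingly. This is a hedged illustration, not PiVR's code. `CircularRegion`, `RecordingPwmOutput`, and `run_closed_loop` are hypothetical names, and the PWM output is a stand-in that records values instead of driving hardware.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CircularRegion:
    """Hypothetical pre-defined region, in pixel coordinates."""

    center_x: float
    center_y: float
    radius: float

    def contains(self, x, y):
        dx = x - self.center_x
        dy = y - self.center_y
        return dx * dx + dy * dy <= self.radius ** 2


class RecordingPwmOutput:
    """Stand-in for a PWM-controllable device (assumption, no hardware)."""

    def __init__(self):
        self.history = []

    def set_duty_cycle(self, percent):
        if not 0.0 <= percent <= 100.0:
            raise ValueError("duty cycle must be between 0 and 100 percent")
        self.history.append(percent)


def duty_cycle_for_position(region, x, y, on_duty=100.0, off_duty=0.0):
    """Rule: full output inside the region, no output outside it."""
    return on_duty if region.contains(x, y) else off_duty


def run_closed_loop(positions, region, pwm):
    """Update the PWM output once per tracked position (one per frame)."""
    for x, y in positions:
        pwm.set_duty_cycle(duty_cycle_for_position(region, x, y))


region = CircularRegion(center_x=50.0, center_y=50.0, radius=10.0)
pwm = RecordingPwmOutput()

# Made-up positions: outside, inside, inside, outside.
run_closed_loop([(10, 10), (45, 50), (50, 58), (70, 50)], region, pwm)
```

On real hardware, the recording class would be replaced by whatever driver controls the user's PWM device, while the rule that maps position to duty cycle could stay unchanged.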

In short, PiVR is a powerful and affordable experimental platform that allows experimenters to create a wide array of virtual reality experiments. Our hope is that PiVR will be adopted by several labs to democratize closed-loop experiments and, by standardizing image quality and the animal detection algorithm, increase reproducibility.

Project Author(s)

David Tadres; Matthieu Louis

This post was automatically generated by David Tadres